Whether digital sentience is possible would seem to matter greatly for our priorities, and so gaining even slightly more refined views on this matter could be quite valuable. Many people appear to treat the possibility, if not indeed the imminence, of digital sentience as a foregone conclusion. David Pearce, in contrast, is skeptical.

Pearce has written and spoken at length about his views on consciousness. My sense, however, is that these expositions do not always manage to convey clearly the core, and actually very simple, reasons underlying Pearce’s skepticism of digital sentience. The aim of this conversation is to probe Pearce so as to shed greater (or perhaps above all simpler) light on why he is skeptical, and thus, hopefully, to advance the discussion of this issue among altruists working to reduce future suffering.

MV: You are skeptical about the possibility of digital sentience. Could you explain why, in simple terms?

DP: Sure. Perhaps we can start by asking why so many people believe that our machines will become conscious (cf. https://www.hedweb.com/quora/2015.html#definition). Consciousness is widely recognised to be scientifically unexplained. But the computer metaphor of mind seems to offer us clues (cf. https://www.hedweb.com/quora/2015.html#braincomp). As far as I can tell, many if not most believers in digital sentience tend to reason along the following lines. Any well-defined cognitive task that the human mind can perform could also be performed by a programmable digital computer (cf. https://en.wikipedia.org/wiki/Turing_machine). A classical Turing machine is substrate-neutral. By “substrate-neutral”, we mean that whether a Turing machine is physically constituted of silicon or carbon or gallium oxide (etc) makes no functional difference to the execution of the program it runs. It’s commonly believed that the behaviour of a human brain can, in principle, be emulated on a classical Turing machine. Our conscious minds must be identical with states of the brain. If our minds weren’t identical with brain states, then dualism would be true (cf. https://www.hedweb.com/quora/2015.html#dualidealmat). Therefore, the behaviour of our minds can in principle be emulated by a digital computer. Moreover, the state-space of all possible minds is immense, embracing not just the consciousness of traditional and enhanced biological lifeforms, but also artificial digital minds and maybe digital superintelligence. Accordingly, the belief that non-biological information-processing machines can’t support consciousness is arbitrary. It’s unjustified carbon chauvinism.

I think most believers in digital sentience would recognise that the above considerations are not a rigorous argument for the existence of inorganic machine consciousness. The existence of machine consciousness hasn’t been derived from first principles. The “explanatory gap” is still unbridged. Yet what is the alternative?

Well, as a scientific rationalist, I’m an unbeliever. Digital computers and the software they run are not phenomenally-bound subjects of experience (cf. https://www.binding-problem.com/). Ascribing sentience to digital computers or silicon robots is, I believe, a form of anthropomorphic projection — a projection their designers encourage by giving their creations cutesy names (“Watson”, “Sophia”, “Alexa” etc).

Before explaining my reasons for believing that digital computers are zombies, I will lay out two background assumptions. Naturally, one or both assumptions can be challenged, though I think they are well-motivated.

The first background assumption might seem scarcely relevant to your question. Perceptual direct realism is false (cf. https://www.hedweb.com/quora/2015.html#distort). Inferential realism about the external world is true. The subjective contents of your consciousness aren’t merely a phenomenally thin and subtle serial stream of logico-linguistic thought-episodes playing out behind your forehead, residual after-images when you close your eyes, inner feelings and emotions and so forth. Consciousness is also your entire phenomenal world-simulation: what naïve realists call the publicly accessible external world. Unless you have the neurological syndromes of simultanagnosia (the inability to experience more than one object at once) or akinetopsia (“motion blindness”), you can simultaneously experience a host of dynamic objects, for example multiple players on a football pitch, or a pride of hungry lions. These perceptual objects populate your virtual world of experience from the sky above to your body-image below. Consciousness is all you directly know. The external environment is an inference, not a given.

Let’s for now postpone discussion of how our skull-bound minds are capable of such an extraordinary feat of real-time virtual world-making. The point is that if you couldn’t experience multiple feature-bound phenomenal objects (i.e. if you were just an aggregate of 86 billion membrane-bound neuronal “pixels” of experience), then you’d be helpless. Compare dreamless sleep. Like your enteric nervous system (the “brain-in-the-gut”), your mind-brain would still be a fabulously complex information-processing system. But you’d risk starving to death or getting eaten. Waking consciousness is immensely adaptive (cf. https://www.hedweb.com/quora/2015.html#evolutionary). Phenomenal binding is immensely adaptive (cf. https://www.hedweb.com/quora/2015.html#purposecon).

My second assumption is physicalism (cf. https://www.hedweb.com/quora/2015.html#materialism). I assume the unity of science. All the special sciences (chemistry, molecular biology etc) reduce to physics. In principle, the behaviour of organic macromolecules such as self-replicating DNA can be described entirely in the mathematical language of physics without mentioning “life” at all, though such high-level description is convenient. Complications aside, no “element of reality” is missing from the mathematical formalism of our best theory of the world, quantum mechanics, or more strictly from tomorrow’s unification of quantum field theory and general relativity.

One corollary of physicalism is that only “weak” emergence is permissible. “Strong” emergence is forbidden. Just as the behaviour of programs running on your PC supervenes on the behaviour of its machine code, the behaviour of biological organisms can in principle be exhaustively reduced to quantum chemistry and thus ultimately to quantum field theory. The conceptual framework of physicalism is traditionally associated with materialism. According to materialism as broadly defined, the intrinsic nature of the physical (more poetically, the mysterious “fire” in the equations) is non-experiential. Indeed, the assumption that quantum field theory describes fields of insentience is normally treated as too trivially obvious to be worth stating explicitly. However, this assumption of insentience leads to the Hard Problem of consciousness. Non-materialist physicalism (cf. https://www.hedweb.com/quora/2015.html#galileoserror) drops this plausible metaphysical assumption. If the intrinsic nature argument is sound, there is no Hard Problem of consciousness: consciousness is the intrinsic nature of the physical (cf. https://www.hedweb.com/quora/2015.html#definephysical). However, both “materialist” physicalists and non-materialist physicalists agree: everything that happens in the world is constrained by the mathematical straitjacket of modern physics. Any supposedly “emergent” phenomenon must be derived, ultimately, from physics. Irreducible “strong” emergence would be akin to magic.

Anyhow, the reason I don’t believe in digital minds is that classical computers are, on the premises outlined above, incapable of phenomenal binding. If we make the standard assumption that their 1s and 0s and logic gates are non-experiential, then digital computers are zombies. Less obviously, digital computers are zombies even if we don’t make this standard assumption! Imagine, fancifully, replacing the non-experiential 1s and 0s of computer software with discrete “pixels” of experience. Run the program as before. The upshot will still be a zombie, more technically a micro-experiential zombie. What’s more, neither increasing the complexity of the code nor exponentially increasing the speed of its execution could cause discrete “pixels” somehow to blend into each other in virtue of their functional role, let alone create phenomenally-bound perceptual objects or a unitary self experiencing a unified phenomenal world. The same is true of a connectionist system (cf. https://en.wikipedia.org/wiki/Connectionism), supposedly more closely modelled on the brain, however well-connected and well-trained the network, and regardless of whether its nodes are experiential or non-experiential. The synchronous firing of distributed feature-processors in a “trained up” connectionist system doesn’t generate a unified perceptual object, again on pain of “strong” emergence. AI programmers and roboticists can use workarounds for the inability of classical computers to bind, but they are just that: workarounds.

Those who believe in digital sentience can protest that we don’t know that phenomenal minds can’t emerge at some level of computational abstraction in digital computers. And they are right! If abstract objects have the causal power to create conscious experience, then digital computer programs might be subjects of experience. But recall we’re here assuming physicalism. If physicalism is true, then even if consciousness is fundamental to the world, we can know that digital computers are — at most — micro-experiential zombies.

Of course, monistic physicalism may be false. “Strong” emergence may be real. But if so, then reality seems fundamentally lawless. The scientific world-picture would be false.

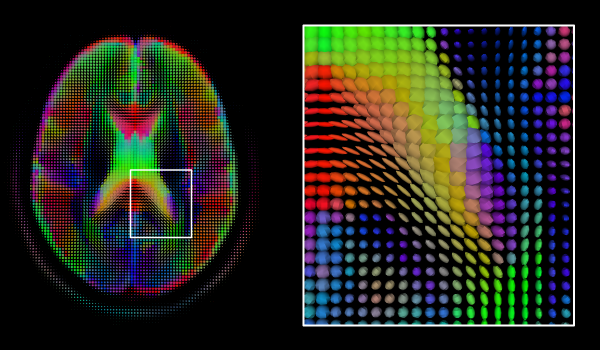

Yet how do biological minds routinely accomplish binding if phenomenal binding is impossible for any classical digital computer (cf. https://en.wikipedia.org/wiki/Universal_Turing_machine)? Even if our neurons support rudimentary “pixels” of experience, why aren’t animals like us in the same boat as classical digital computers or classically parallel connectionist systems?

I can give you my tentative answer. Naïvely, it’s the reductio ad absurdum of quantum mind: “Schrödinger’s neurons” (cf. https://www.hedweb.com/quora/2015.html#quantumbrain).

Surprisingly, it’s experimentally falsifiable via interferometry (cf. https://en.wikipedia.org/wiki/Quantum_mind#David_Pearce).

Yet the conjecture I explore may conceivably be of interest only to someone who already feels the force of the binding problem. Plenty of researchers would say it’s a ridiculous solution to a nonexistent problem. I agree it’s crazy; but it’s worth falsifying. Other researchers just lump phenomenal binding together with the Hard Problem (cf. https://www.hedweb.com/quora/2015.html#categorize) as one big insoluble mystery they suppose can be quarantined from the rest of scientific knowledge.

I think their defeatism and optimism alike are premature.

MV: Thanks, David. A lot to discuss there, obviously.

Perhaps the most crucial point to really appreciate in order to understand your skepticism is that you are a strict monist about reality. That is, “the experiential” is not something over and above “the physical”, but rather identical with it (which, to be clear, does not imply that all physical things have minds, or complex experiences). And so if “the mental” and “the physical” are essentially the same ontological thing, or phenomenon, under two different descriptions, then there must, roughly speaking, also be a match in terms of their topological properties.

As Mike Johnson explained your view: “consciousness is ‘ontologically unitary’, and so only a physical property that implies ontological unity … could physically instantiate consciousness.” (Principia Qualia, p. 73). (Note that “consciousness” here refers to an ordered, composite mind, not to phenomenality more generally.)

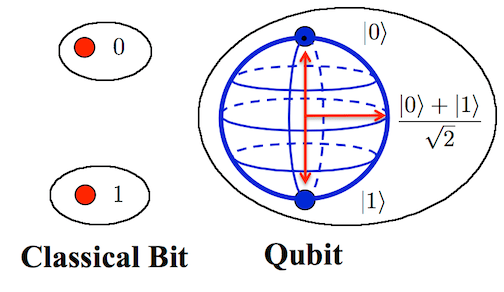

Conversely, a system that is physically discrete or disconnected (say, a computer composed of billiard balls that bump into each other, or lighthouses that exchange signals across hundreds of kilometers) could not, on your view, support a unitary mind. If we think of consciousness by analogy with waves, your view is roughly that a unitary mind is like a large, composite wave of sorts, akin to a song, whereas disconnected “pixels of experience” are like discrete microscopic proto-waves, akin to tiny disjoint blobs of sound. (Elsewhere you quote Seth Lloyd saying something similar about classical versus quantum computations: “A classical computation is like a solo voice — one line of pure tones succeeding each other. A quantum computation is like a symphony — many lines of tones interfering with one another.”)

This is why you say that “computer software with discrete ‘pixels’ of experience will still be a micro-experiential zombie”, and why you say that “even if consciousness is fundamental to the world, we can know that digital computers are at most micro-experiential zombies” — it’s because of this physical discreteness, or “disconnectedness”.

And this is where it seems to me that the computational view of mind is also starkly at odds with common sense, as well as with monism. For it seems highly counterintuitive to claim that billiard balls bumping into each other, or lighthouses separated by hundreds of kilometers that exchange discrete signals, could, even in principle, mediate a unitary mind. I wonder whether most people who hold a computational view of mind are really willing to bite this bullet. (Such views have also been elaborately criticized by Mike Johnson and Scott Aaronson — critiques that I have seen no compelling replies to.)

It also seems non-monistic in that it appears impossible to give a plausible account of where a unitary mind is supposed to be found in this picture (e.g. in a picture with discrete computations occurring serially over long distances), except perhaps as a separate, dualist phenomenon that we somehow map onto a set of physically discrete computations occurring over time, which seems to me inelegant and unparsimonious. Moreover, it gives rise to an explosion of minds, since we can then see minds in a vast set of computations that are somehow causally connected across time and space, with the same computations being included in many distinct minds. This picture is at odds with a monist view that implies a one-to-one correspondence between concrete physical states and concrete mental states, or rather, that sees these two sides as distinct descriptions of the exact same reality.

The question is then how phenomenal binding could occur. You explore a quantum mind hypothesis involving quantum coherence. So what are your reasons for thinking that quantum coherence is necessary for phenomenal binding? Why would, say, electromagnetic fields in a synchronous state not be enough?

DP: If the phenomenal unity of mind is an effectively classical phenomenon, then I have no idea how to derive the properties of our phenomenally bound minds from decohered, effectively classical neurons — not even in principle, let alone in practice.

MV: And why is that? What is it that makes deriving the properties of our phenomenally bound minds seem feasible in the case of coherent states, unlike in the case of decohered ones?

DP: Quantum coherent states are individual states — i.e. fundamental physical features of the world — not mere unbound aggregates of classical mind-dust. On this story, decoherence (cf. https://arxiv.org/pdf/1911.06282.pdf) explains phenomenal unbinding.

MV: So it is because only quantum coherent states could constitute the “ontological unity” of a unitary, “bound” mind. Decoherent states, on your view, are not and could not be ontologically unitary in the required sense?

DP: Yes!

Digital computing depends on effectively classical, decohered individual bits of information, whether implemented in Turing’s original tape set-up, in a modern digital computer, or even in the world’s population of skull-bound minds, should they agree to take part in an experiment to see whether a global mind can emerge from a supposed global brain.

One can’t create perceptual objects, let alone unified minds, from classical mind-dust, even if, strictly speaking, the motes of decohered “dust” are only effectively classical, i.e. their phase information has leaked away into the environment. If the 1s and 0s of a digital computer are treated as discrete micro-experiential pixels, then when running a program, we don’t need to consider the possibility of coherent superpositions of 1s and 0s (or of micro-experiences). If the bits weren’t effectively classical and discrete, then the program wouldn’t execute.
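
Pearce’s gloss on “effectively classical” (phase information has leaked away into the environment) has a standard textbook counterpart. The Python sketch below is only an illustration under stated assumptions, not part of Pearce’s argument: it models one qubit under pure dephasing with an exponential decay set by a hypothetical coherence time `t2`. The off-diagonal entries of the density matrix, which carry the phase information, shrink toward zero, and the purity Tr(ρ²) falls from 1 (a coherent superposition) to 0.5 (a 50/50 classical mixture). The function names are illustrative helpers, and the model says nothing about whether any of these states are experiential.

```python
import math

# A 2x2 density matrix, stored as nested lists of complex numbers.
Matrix = list[list[complex]]


def superposition_density() -> Matrix:
    """Density matrix of the equal superposition (|0> + |1>)/sqrt(2)."""
    return [[0.5 + 0j, 0.5 + 0j],
            [0.5 + 0j, 0.5 + 0j]]


def dephase(rho: Matrix, t: float, t2: float) -> Matrix:
    """Toy pure-dephasing model (an assumption, not a model of neurons):
    the off-diagonal (phase) terms decay as exp(-t / t2), while the
    diagonal populations stay fixed. t2 is a hypothetical coherence time."""
    decay = math.exp(-t / t2)
    return [[rho[0][0], rho[0][1] * decay],
            [rho[1][0] * decay, rho[1][1]]]


def purity(rho: Matrix) -> float:
    """Tr(rho^2); for a Hermitian matrix this equals the sum of |rho_ij|^2.
    1.0 means a pure (coherent) state, 0.5 a maximally mixed qubit."""
    return sum(abs(x) ** 2 for row in rho for x in row)


if __name__ == "__main__":
    rho0 = superposition_density()
    for t in (0.0, 1.0, 5.0, 20.0):
        rho_t = dephase(rho0, t, t2=1.0)
        print(f"t={t:5.1f}  |rho_01|={abs(rho_t[0][1]):.4f}  purity={purity(rho_t):.4f}")
```

Only the off-diagonal terms change in this toy model; the probabilities of reading 0 or 1 stay at 0.5 throughout. That is the sense in which a fully dephased qubit is, for computational purposes, indistinguishable from an ordinary fair random bit.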

MV: In other words, you are essentially saying that binding/unity between decohered states is ultimately no more tenable than binding/unity between, say, two billiard balls separated by a hundred miles? Because they are in a sense similarly ontologically separate?

DP: Yes!

MV: So to summarize, your argument is roughly the following:

1. observed phenomenal binding, or a unitary mind, combined with

2. an empirically well-motivated monistic physicalism, means that

3. we must look for a unitary physical state as the “mediator”, or rather the physical description, of mind [since the ontological identity from (2) implies that the phenomenal unity from (1) must be paralleled in our physical description], and it seems that

4. only quantum coherent states could truly fit the bill of such ontological unity in physical terms.

DP: 1 to 4, yes!

MV: Cool. And in step 4 in particular, to spell that out more clearly, the reasoning is roughly that classical states are effectively (spatiotemporally) serial, discrete, disconnected, etc. Quantum coherent states, in contrast, are a connected, unitary, individual whole.

Classical bits in a sense belong to disjoint “ontological sets”, whereas qubits belong to the same “ontological set” (as I’ve tried to illustrate somewhat clumsily below, and in line with Seth Lloyd’s quote above).

Is that a fair way to put it?

DP: Yes!

I sometimes say that we don’t yet know who will play Mendel to Zurek’s Darwin. If experience discloses the intrinsic nature of the physical, i.e. if non-materialist physicalism is true, then we must necessarily consider the nature of experience at what are intuitively absurdly short timescales in the CNS. At sufficiently fine-grained temporal resolutions, we can’t just assume the existence of decohered macromolecules, neurotransmitters, receptors, membrane-bound neurons etc.; they are weakly emergent, dynamically stable patterns of “cat states”. These high-level patterns must be derived from quantum bedrock, which of course I haven’t done. All I’ve done is make a “philosophical” conjecture that (1) quantum coherence mediates the phenomenal unity of our minds; and (2) quantum Darwinism (cf. https://www.sciencemag.org/news/2019/09/twist-survival-fittest-could-explain-how-reality-emerges-quantum-haze) offers a ludicrously powerful selection-mechanism for sculpting what would otherwise be mere phenomenally-bound “noise”.
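
MV’s contrast between classical bits in disjoint “ontological sets” and qubits in a shared one has a standard mathematical analogue in separability: whether a joint quantum state can be written as a product of independent one-qubit states. Reading “ontological unity” this way is an interpretive assumption, not something Pearce states here, and the Python sketch below is a minimal illustration only. For a normalized two-qubit pure state with amplitudes c_ab, the concurrence `2|c00*c11 - c01*c10|` is zero exactly when the state factorizes. The names `amp`, `concurrence`, `is_product_state` and `examples` are hypothetical helpers, not a library API.

```python
import math

# Amplitudes of a two-qubit pure state, keyed by basis label: (a, b) -> c_ab for |ab>.
# Missing keys are treated as zero amplitude.
Amplitudes = dict[tuple[int, int], complex]


def amp(state: Amplitudes, a: int, b: int) -> complex:
    """Amplitude c_ab of basis state |ab>, defaulting to 0."""
    return state.get((a, b), 0j)


def concurrence(state: Amplitudes) -> float:
    """Concurrence of a normalized two-qubit pure state: 2|c00*c11 - c01*c10|.
    0.0 means the state is a product of two independent one-qubit states;
    1.0 means it is maximally entangled."""
    det = amp(state, 0, 0) * amp(state, 1, 1) - amp(state, 0, 1) * amp(state, 1, 0)
    return 2 * abs(det)


def is_product_state(state: Amplitudes, tol: float = 1e-12) -> bool:
    """A two-qubit pure state factorizes exactly when its concurrence is zero."""
    return concurrence(state) < tol


r = 1 / math.sqrt(2)
examples = {
    # A definite classical register reading "01".
    "|01>": {(0, 1): 1 + 0j},
    # Each qubit in the superposition (|0> + |1>)/sqrt(2), but no entanglement.
    "|+>|+>": {(a, b): 0.5 + 0j for a in (0, 1) for b in (0, 1)},
    # Bell state (|00> + |11>)/sqrt(2): cannot be split into two one-qubit states.
    "Bell": {(0, 0): r + 0j, (1, 1): r + 0j},
}

if __name__ == "__main__":
    for name, state in examples.items():
        print(f"{name:8s} concurrence={concurrence(state):.3f}  product={is_product_state(state)}")
```

The middle example is worth noticing: each qubit is individually in a coherent superposition, yet the pair still factorizes, whereas the Bell state admits no decomposition into two separate one-qubit descriptions at all.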

MV: Thanks for that clarification.

I guess it’s also worth stressing that you do not claim this to be any more than a hypothesis, while at the same time you admit that you have a hard time seeing how alternative accounts could explain phenomenal binding.

Moreover, it’s worth stressing that the conjecture resulting from your line of reasoning above is in fact, as you noted, a falsifiable one — a rare distinction for a theory of consciousness.

A more general point to note is that skepticism about digital sentience need not be predicated on the conjecture you presented above, as there are other theories of mind, not necessarily involving quantum coherence, that also imply that digital computers are unable to mediate a conscious mind (including some of the theories hinted at above, and perhaps other, more recent theories). For example, one may accept steps 1-3 in the argument above and then be more agnostic about step 4, remaining open to the possibility that binding could be achieved in other ways, while still considering contemporary digital computers unlikely to be able to mediate a unitary mind (e.g. because of the fundamental architectural differences between such computers and biological brains).

Okay, having said all that, let’s now move on to a slightly different issue. Beyond digital sentience in particular, you have also expressed skepticism regarding artificial sentience more generally (i.e. non-digital artificial sentience). Can you explain the reasons for this skepticism?

DP: Well, aeons of posthuman biological minds probably lie ahead. They’ll be artificial — genetically rewritten, AI-augmented, most likely superhumanly blissful, but otherwise inconceivably alien to Darwinian primitives. My scepticism is about the supposed emergence of minds in classical information processors — whether programmable digital computers, classically parallel connectionist systems or anything else.

What about inorganic quantum minds? Well, I say a bit more e.g. here: https://www.hedweb.com/quora/2015.html#nonbiological

A pleasure-pain axis has been so central to our experience that sentience in everything from worms to humans is sometimes (mis)defined in terms of the capacity to feel pleasure and pain. But essentially, I see no reason to believe that such (hypothetical) phenomenally bound consciousness in future inorganic quantum computers will support a pleasure-pain axis any more than, say, the taste of garlic.

In view of our profound ignorance of physical reality, however, I’m cautious: this is just my best guess!

MV: Interesting. You note that you see no reason to believe that such systems would have a pleasure-pain axis. But what about the argument that pain has proven exceptionally adaptive over the course of biological evolution, and might thus plausibly prove adaptive in future forms of evolution as well (assuming things won’t necessarily be run according to civilized values)?

DP: Currently, I can’t see any reason to suppose hedonic tone (or the taste of garlic) could be instantiated in inorganic quantum computers. If (a big “if”) the quantum-theoretic version of non-materialist physicalism is true, then subjectively it’s like something to be an inorganic quantum computer, just as it’s like something subjectively to be superfluid helium, a nonbiological macro-quale. But out of the zillions of state-spaces of experience, why expect the state-space of phenomenally-bound experience that inorganic quantum computers hypothetically support to include hedonic tone? My guess is that futuristic quantum computers will instantiate qualia for which humans have neither name nor conception, and which have no counterpart in biological minds.

All this is very speculative! It’s an intuition, not a rigorous argument.

MV: Fair enough. What then is your view of hypothetical future computers built from biological neurons?

DP: Artificial organic neuronal networks are perfectly feasible. Unlike silicon-based “neural networks” — a misnomer in my view — certain kinds of artificial organic neuronal networks could indeed suffer. Consider the reckless development of “mini-brains”.

MV: Yeah, it should be uncontroversial that such developments entail serious risks.

Okay, David. What you have said here certainly provides much food for thought. Thanks a lot for patiently exploring these issues with me, and not least for all your work and your dedication to reducing the suffering of all sentient beings.

DP: Thank you, Magnus. You’re very kind. May I just add a recommendation? Anyone who hasn’t yet done so should read your superb Suffering-Focused Ethics (2020).